Abstract

Memory forensics is a great help during investigations and incident response, but collecting memory images and analyzing them at scale remains a challenge.

It is therefore crucial to be able to examine memory images stored on platforms other than traditional file systems. With the rise of cloud technologies, a new form of storage known as object storage has emerged, and enabling memory analysis on it opens exciting opportunities for innovation.

In this article, we go through the journey of making the volatility3 framework compatible with S3 object storage in order to perform memory analysis over the network. The reader will also discover how this new capability will be applied to the VolWeb 2.0 project, which is still in development.

Disclaimer: all of the information about the volatility3 framework given in this blog post comes from my own understanding of the framework and of the project documentation [1]. Feel free to contact me at felix.guyard@forensicxlab.com to correct any mistake in the explanations.

Object storage

Object storage is a data storage architecture that manages data as objects, rather than as blocks or files like traditional storage systems. Each piece of data is assigned a unique identifier and stored in a flat address space. Objects contain not only the data itself but also metadata and other attributes that provide additional information about the object. One of the key features of object storage is its scalability, which makes it ideal for storing large datasets such as digital forensics evidence.
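To make the model concrete, here is a toy in-memory sketch of the three ideas above: a flat namespace, a unique identifier per object, and metadata stored alongside the data. The `FlatObjectStore` class and its `put`/`get`/`head` methods are hypothetical and only illustrate the concept; they are not the API of S3, MinIO or Google Cloud Storage.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class StoredObject:
    data: bytes
    metadata: Dict[str, str] = field(default_factory=dict)


class FlatObjectStore:
    """Toy object store: one flat namespace where keys map straight to objects."""

    def __init__(self) -> None:
        self._objects: Dict[str, StoredObject] = {}

    def put(self, data: bytes, metadata: Optional[Dict[str, str]] = None,
            key: Optional[str] = None) -> str:
        # Each object gets a unique identifier; there is no directory tree.
        key = key or str(uuid.uuid4())
        self._objects[key] = StoredObject(data, dict(metadata or {}))
        return key

    def get(self, key: str) -> bytes:
        return self._objects[key].data

    def head(self, key: str) -> Dict[str, str]:
        # Metadata can be inspected without returning the payload.
        return self._objects[key].metadata


store = FlatObjectStore()
key = store.put(b"\x00" * 16, {"case": "demo", "format": "vmem"}, key="Snapshot6.vmem")
print(store.head(key))
```

In real object stores, a key such as `case-1/Snapshot6.vmem` is still a single flat name; the slash only looks like a folder.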

Support for object storage in volatility3

Volatility3 state of the art

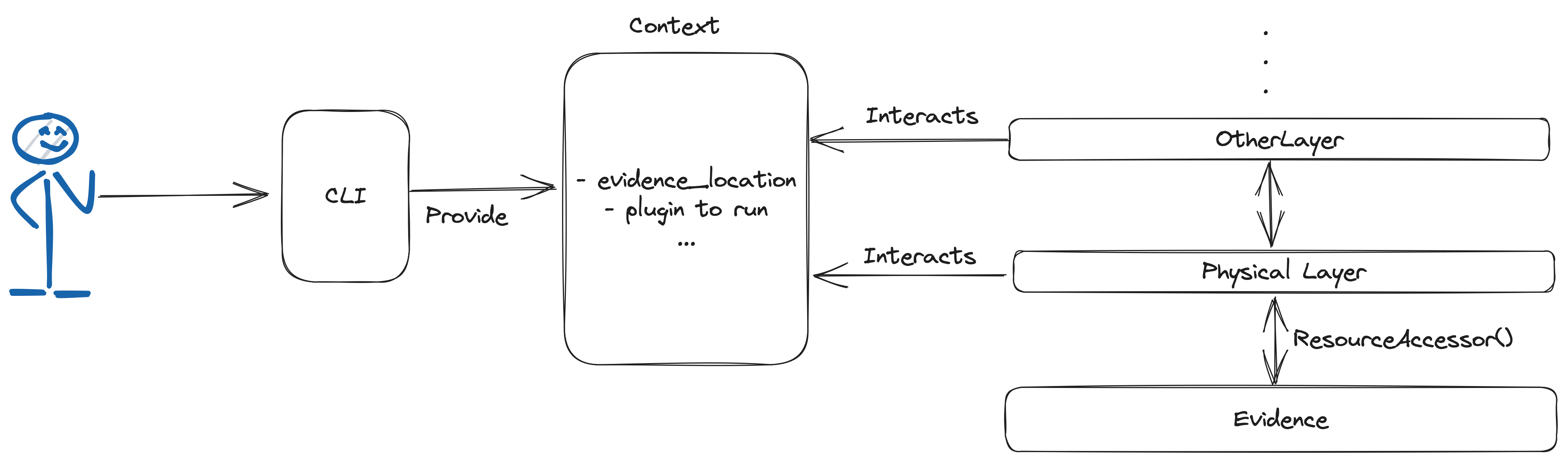

Out of the box, the volatility3 framework provides a single way to access the data of a memory image during contextualization: direct access on a traditional filesystem.

When an analyst uses volatility3 to extract data with a plugin, the framework builds the context “behind the scenes”. Alongside acquisition and analysis, this is the most critical step of the memory forensics workflow, yet it is too often overlooked by investigators.

In the framework, data contextualization is done through “layering” and “stacking”. Each layer in volatility3 focuses on a particular aspect of memory forensics, such as parsing specific data structures, analyzing various kinds of artifacts, or understanding specific evidence formats. Layers can be seen as individual building blocks that are stacked on top of each other in a specific order to build the right context before data is extracted for analysis. For example, a memory image in the “vmem” format causes the “VmwareLayer” to be stacked so that the file format can be read and data extracted.
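The stacking idea can be illustrated with a toy sketch: each layer exposes a `read(offset, length)` method, and an upper layer translates its own addresses before delegating to the layer below. `ToyFileLayer` and `ToyMappedLayer` are hypothetical classes, far simpler than volatility3's actual layers; only the delegation pattern is the point here.

```python
from typing import List, Tuple


class ToyFileLayer:
    """Bottom layer: raw bytes standing in for the evidence file."""

    def __init__(self, data: bytes) -> None:
        self._data = data

    def read(self, offset: int, length: int) -> bytes:
        return self._data[offset:offset + length]


class ToyMappedLayer:
    """Upper layer: maps its own addresses onto the layer below.

    Each mapping entry is (layer_start, base_start, length).
    """

    def __init__(self, base, mapping: List[Tuple[int, int, int]]) -> None:
        self._base = base
        self._mapping = mapping

    def read(self, offset: int, length: int) -> bytes:
        for start, base_start, size in self._mapping:
            if start <= offset and offset + length <= start + size:
                # Translate the address, then delegate to the lower layer.
                return self._base.read(base_start + (offset - start), length)
        raise ValueError(f"address {offset:#x} is not mapped")


raw = ToyFileLayer(b"HEADER" + b"ABCDEFGH")
# Address 0x1000 of the upper layer maps to file offset 6, just past a 6-byte header.
stacked = ToyMappedLayer(raw, [(0x1000, 6, 8)])
print(stacked.read(0x1002, 3))  # b'CDE'
```

A plugin only talks to the top of the stack; which file format sits underneath is decided while the stack is built.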

One of these layers is the “FileLayer”, found in the “physical” module. Among other things, the framework uses it to locate, open, read and, more generally, access the evidence provided by the investigator. To interface with the physical evidence, the FileLayer relies on what is called a “ResourceAccessor”.

Adding S3 bucket support to the FileLayer

The FileLayer currently supports one type of resource accessor, which handles the file:// and http(s):// URL schemes. It relies on Python 3's urllib library to read both the evidence and remote PDB files.
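A short standalone sketch shows the urllib behavior this accessor builds on: `urllib.request.urlopen` selects a handler from the URL scheme, so a `file://` URL is served from disk, while a bucket URL has no handler at all. This is not volatility3's `ResourceAccessor` code; `read_header` and the file name are hypothetical, and the header bytes are dummy data.

```python
import pathlib
import tempfile
import urllib.error
import urllib.request


def read_header(url: str, size: int = 4) -> bytes:
    # urllib picks a handler from the URL scheme: file:// is served from the
    # local filesystem, http(s):// goes over the network.
    with urllib.request.urlopen(url) as handle:
        return handle.read(size)


with tempfile.TemporaryDirectory() as tmp:
    evidence = pathlib.Path(tmp) / "evidence.raw"
    evidence.write_bytes(b"KDBG" + b"\x00" * 12)
    header = read_header(evidence.as_uri())

print(header)  # b'KDBG'

# There is no built-in handler for bucket URLs, hence the need for new accessors.
try:
    read_header("s3://bucket/Snapshot6.vmem")
    s3_supported = True
except urllib.error.URLError as exc:
    s3_supported = False
    print(exc.reason)  # unknown url type: s3
```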

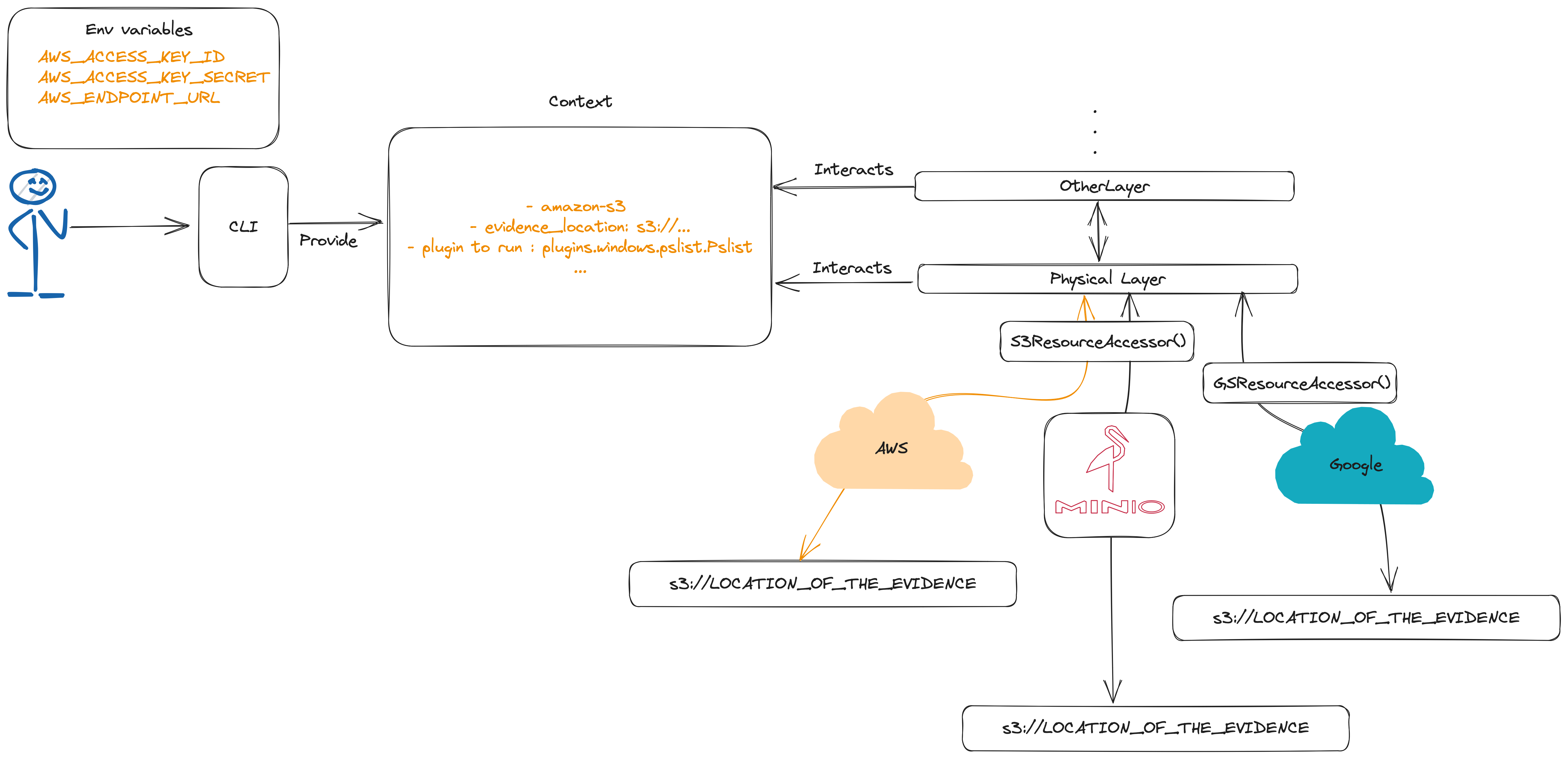

By letting the analyst indicate that the evidence has to be read from remote object storage, we can pass this information to the context and create another resource accessor.

Here is what was done to make this possible.

First, a new mutually exclusive argument group, “-s3/--amazon-bucket” / “-gs/--google-bucket”, is added to the framework CLI:

```python
group = parser.add_mutually_exclusive_group()
group.add_argument(
    "-s3",
    "--amazon-bucket",
    action="store_true",
    help="The file provided will come from an amazon bucket",
)
group.add_argument(
    "-gs",
    "--google-bucket",
    action="store_true",
    help="The file provided will come from a google bucket",
)
```
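The effect of the mutually exclusive group can be checked with a standalone parser. This sketch rebuilds only the two bucket flags plus a `-f/--file` option (an assumption standing in for the rest of the volatility3 CLI) and shows that combining both flags is rejected by argparse.

```python
import argparse

parser = argparse.ArgumentParser(prog="vol")
group = parser.add_mutually_exclusive_group()
group.add_argument("-s3", "--amazon-bucket", action="store_true",
                   help="The file provided will come from an amazon bucket")
group.add_argument("-gs", "--google-bucket", action="store_true",
                   help="The file provided will come from a google bucket")
parser.add_argument("-f", "--file")

args = parser.parse_args(["-s3", "-f", "s3://bucket/Snapshot6.vmem"])
print(args.amazon_bucket, args.google_bucket)  # True False

# Passing both flags is rejected: argparse prints an error and exits with status 2.
status = None
try:
    parser.parse_args(["-s3", "-gs"])
except SystemExit as exc:
    status = exc.code
print("exit status", status)
```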

Next, the CLI checks that the environment variables required to reach the requested bucket are set. Here is the check for Amazon S3/MinIO:

```python
if args.amazon_bucket:
    # First check that the required environment variables are set,
    # then flag the cloud location in the context configuration.
    try:
        aws_access_key_id = os.getenv("AWS_ACCESS_KEY_ID")
        aws_secret_access_key = os.getenv("AWS_SECRET_ACCESS_KEY")
        endpoint_url = os.getenv("AWS_ENDPOINT_URL")
        if aws_access_key_id is None:
            raise ValueError("AWS_ACCESS_KEY_ID environment variable is not set")
        if aws_secret_access_key is None:
            raise ValueError("AWS_SECRET_ACCESS_KEY environment variable is not set")
        if endpoint_url is None:
            raise ValueError("AWS_ENDPOINT_URL environment variable is not set")
    except ValueError as excp:
        parser.error(str(excp))
    except KeyError as excp:
        # os.getenv() returns None instead of raising, so this branch only
        # matters if the lookups are switched to os.environ[...].
        parser.error(str(excp) + " environment variable is not set")
    ctx.config["Cloud.AWS"] = "True"
```
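A possible refinement, sketched under the assumption that reporting every missing variable at once is preferable to stopping at the first one: collect the undefined names in a small helper. `missing_env_vars` and `REQUIRED_S3_VARS` are hypothetical names, not part of the fork. An empty value counts as set, matching the `is None` checks in the CLI code, so `AWS_ENDPOINT_URL=""` is accepted.

```python
import os
from typing import Iterable, List, Mapping, Optional

REQUIRED_S3_VARS = ("AWS_ACCESS_KEY_ID", "AWS_SECRET_ACCESS_KEY", "AWS_ENDPOINT_URL")


def missing_env_vars(names: Iterable[str],
                     env: Optional[Mapping[str, str]] = None) -> List[str]:
    """Return the variable names that are not defined at all."""
    env = os.environ if env is None else env
    return [name for name in names if env.get(name) is None]


missing = missing_env_vars(
    REQUIRED_S3_VARS, {"AWS_ACCESS_KEY_ID": "user", "AWS_ENDPOINT_URL": ""}
)
print(missing)  # ['AWS_SECRET_ACCESS_KEY']
```

In the CLI this would become something like `if missing: parser.error(", ".join(missing) + " not set")`.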

When the layers are stacked and attached to their requirements, the FileLayer is created and added to the context:

```python
physical_layer = physical.FileLayer(
    new_context, current_config_path, current_layer_name
)
new_context.add_layer(physical_layer)
```

Notice that a FileLayer object is created here. The FileLayer therefore needs to be enhanced so that it determines the right resource accessor from the context configuration:

```python
class FileLayer(interfaces.layers.DataLayerInterface):
    """a DataLayer backed by a file on the filesystem."""

    def __init__(
        self,
        context: interfaces.context.ContextInterface,
        config_path: str,
        name: str,
        metadata: Optional[Dict[str, Any]] = None,
    ) -> None:
        super().__init__(
            context=context, config_path=config_path, name=name, metadata=metadata
        )
        # When the PDB reader's FileLayer is configured, keep the default
        # accessor so that symbol files are still fetched over file:// or http(s)://.
        reading_pdb = "pdbreader.FileLayer.location" in self.context.config
        if "Cloud.AWS" in self.context.config and not reading_pdb:
            self._accessor = resources.S3ResourceAccessor()
        elif "Cloud.Google" in self.context.config and not reading_pdb:
            self._accessor = resources.GCResourceAccessor(self.context)
        else:
            self._accessor = resources.ResourceAccessor()
        [...]
```
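To make the branching explicit, the decision can be pulled into a small pure function and exercised with plain dictionaries standing in for volatility3's configuration object. The function name `choose_accessor_kind` and its string labels are hypothetical; this is a sketch for testing the logic, not code from the fork.

```python
from typing import Mapping


def choose_accessor_kind(config: Mapping[str, object]) -> str:
    """Mirror of the branching in FileLayer.__init__, returned as a label."""
    # PDB downloads keep the default accessor even when a bucket is in use.
    reading_pdb = "pdbreader.FileLayer.location" in config
    if "Cloud.AWS" in config and not reading_pdb:
        return "s3"
    if "Cloud.Google" in config and not reading_pdb:
        return "gcs"
    return "default"


print(choose_accessor_kind({"Cloud.AWS": "True"}))  # s3
```

Keeping the decision free of side effects means it can be unit-tested without any bucket credentials.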

Then, the custom resource accessors are defined. The accessors added so far support AWS/MinIO and Google Cloud buckets, relying on the s3fs [2] and gcsfs [3] libraries.

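```python
[...]
import s3fs
import gcsfs
[...]


class S3ResourceAccessor:
    def __init__(self):
        # No arguments: s3fs resolves credentials through botocore's default
        # chain, e.g. the AWS_ACCESS_KEY_ID / AWS_SECRET_ACCESS_KEY variables.
        self.s3 = s3fs.S3FileSystem()

    def open(self, url: str, mode: str = "rb") -> Any:
        # Returns a seekable file-like object for an s3://bucket/key URL.
        return self.s3.open(url, mode)


class GCResourceAccessor:
    def __init__(self, context: interfaces.context.ContextInterface):
        self.gcs = gcsfs.GCSFileSystem(
            project=context.config["Cloud.Google.project_id"],
            token=context.config["Cloud.Google.application_credentials"],
        )

    def open(self, url: str, mode: str = "rb") -> Any:
        # Returns a seekable file-like object for a gs://bucket/key URL.
        return self.gcs.open(url, mode)
```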
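Because every accessor only has to provide `open(url, mode)`, a local stand-in with the same shape can help exercise layers without a bucket. `LocalResourceAccessor` and `read_at` below are hypothetical helpers, not part of volatility3 or the fork. The assumption behind the comment in `read_at` is that s3fs and gcsfs file objects, being fsspec buffered files, support `seek()`/`read()` and typically fetch byte ranges rather than the whole object.

```python
import os
import tempfile
from typing import IO, Any


class LocalResourceAccessor:
    """Hypothetical stand-in exposing the same open(url, mode) method as the
    bucket accessors, backed by the local filesystem."""

    def open(self, url: str, mode: str = "rb") -> IO[Any]:
        prefix = "file://"
        path = url[len(prefix):] if url.startswith(prefix) else url
        return open(path, mode)


def read_at(accessor: Any, url: str, offset: int, length: int) -> bytes:
    # A layer only needs seek() and read() on the returned object, so any
    # accessor honoring open(url, mode) can sit under the FileLayer.
    with accessor.open(url, "rb") as handle:
        handle.seek(offset)
        return handle.read(length)


with tempfile.NamedTemporaryFile(suffix=".vmem", delete=False) as tmp:
    tmp.write(b"\x00" * 0x200 + b"MZ\x90\x00")
    image_path = tmp.name

result = read_at(LocalResourceAccessor(), image_path, 0x200, 2)
os.unlink(image_path)
print(result)  # b'MZ'
```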

Testing

It is now time to test the modified framework. Memory images between 1 and 8 GB were used for these tests.

On-premise MinIO

```
~» export AWS_ENDPOINT_URL=http://127.0.0.1:9000
~» export AWS_ACCESS_KEY_ID=user
~» export AWS_SECRET_ACCESS_KEY=password
~» vol -s3 -f s3://20acf5e8-ab93-42ff-b87a-15065da1016c/Snapshot6.vmem windows.pslist --dump --pid 244
Volatility 3 Framework 2.4.1
Progress: 100.00 PDB scanning finished

PID PPID ImageFileName Offset(V) Threads Handles SessionId Wow64 CreateTime ExitTime File output
244 4 smss.exe 0xfa8002c33b30 2 29 N/A False 2020-12-27 06:18:43.000000 N/A pid.244.0x48080000.dmp
```

Remote AWS S3 bucket using volshell

```
~» export AWS_ENDPOINT_URL="" # an empty endpoint URL points to AWS by default
~» export AWS_ACCESS_KEY_ID=REDACTED
~» export AWS_SECRET_ACCESS_KEY=REDACTED
~» volshell -s3 -f s3://20acf5e8-ab93-42ff-b87a-15065da1016c/Snapshot6.vmem -w
Volshell (Volatility 3 Framework) 2.5.0
Readline imported successfully PDB scanning finished

Call help() to see available functions

Volshell mode : Windows
Current Layer : layer_name
Current Symbol Table : symbol_table_name1
Current Kernel Name : kernel

(layer_name) >>> from volatility3.plugins.windows import pslist
(layer_name) >>> pslist.PsList.get_requirements()
(layer_name) >>> display_plugin_output(pslist.PsList, kernel = self.config['kernel'])

PID PPID ImageFileName Offset(V) Threads Handles SessionId Wow64 CreateTime ExitTime File output
4 0 System 0xfa80024b3890 83 519 N/A False 2020-12-27 06:18:43.000000 N/A Disabled
244 4 smss.exe 0xfa8002c33b30 2 29 N/A False 2020-12-27 06:18:43.000000 N/A Disabled
332 324 csrss.exe 0xfa8002e79b30 9 562 0 False 2020-12-27 06:18:49.000000 N/A Disabled
384 376 csrss.exe 0xfa8003a0f8a0 10 171 1 False 2020-12-27 06:18:50.000000 N/A Disabled
392 324 wininit.exe 0xfa8003a12060 5 80 0 False 2020-12-27 06:18:50.000000 N/A Disabled
428 376 winlogon.exe 0xfa8003a31060 5 120 1 False 2020-12-27 06:18:50.000000 N/A Disabled
```

Google Cloud bucket

```
~» export GOOGLE_PROJECT_ID=REDACTED
~» export GOOGLE_APPLICATION_CREDENTIALS=/PATH/gcreds.json
~» vol -gs -f gs://volweb/Snapshot6.vmem windows.dlllist
Volatility 3 Framework 2.4.1
Progress: 100.00 PDB scanning finished

PID Process Base Size Name Path LoadTime File output
244 smss.exe 0x48080000 0x20000 smss.exe \SystemRoot\System32\smss.exe N/A Disabled
244 smss.exe 0x777e0000 0x1a9000 ntdll.dll C:\Windows\SYSTEM32\ntdll.dll N/A Disabled
332 csrss.exe 0x49b80000 0x6000 csrss.exe C:\Windows\system32\csrss.exe N/A Disabled
332 csrss.exe 0x777e0000 0x1a9000 ntdll.dll C:\Windows\SYSTEM32\ntdll.dll N/A Disabled
[...]
```

Applications

One may ask what the potential applications of these additions to the framework are.

-

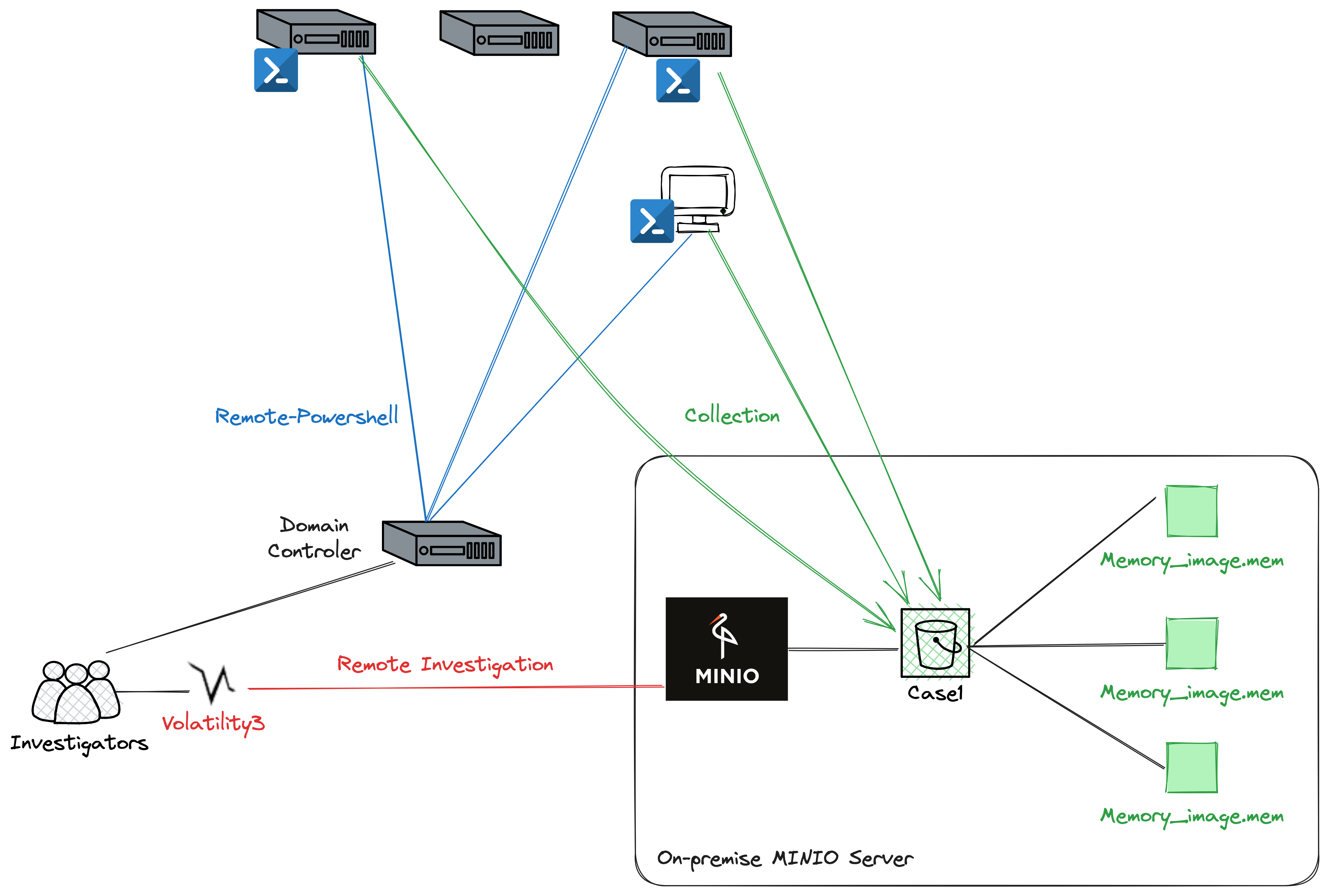

Remote investigation: Memory forensics can be performed on object storage without requiring physical access to the device hosting the storage. This allows for remote investigations, making it more convenient and efficient.

-

Scalability: Object storage over the network offers scalability, allowing the storage capacity to be expanded easily when dealing with large data volumes. This is particularly useful when performing memory forensics on extensive datasets.

-

Improved Collaboration: By conducting memory forensics on object storage accessible over the network, multiple IT specialists and forensic experts can collaborate on the investigation simultaneously. This promotes knowledge sharing and enhances the chances of identifying critical evidence.

-

Memory Acquisition at scale : One can script memory acquisition and deploy evidence collection on multiple system by storing the result into an On-Premise solution like Minio or in a cloud solution like AWS.

-

No third party installation : Some people don’t like to have “agent-base” solutions installed on their assets to perform memory forensics. Once the evidence are acquired, memory forensics will be performed on a dedicated environment.

Conclusion

To conclude, the goal of this addition to the volatility3 framework is to give digital investigators and incident responders more capabilities when performing memory forensics. The code can be reviewed in the fork implementing this feature: https://github.com/forensicxlab/volatility3/tree/feature/bucket-s3 .

Like any solution, remote memory forensics has downsides. Reading evidence over the network has an impact on performance, so investigators need to choose their storage solution carefully. However, with the network performance available in cloud environments, network memory forensics can be very efficient. In a future blog post, a concrete application of remote memory forensics will be demonstrated by introducing VolWeb 2.0, which is still in development at the time of writing.
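Much of the performance cost comes from the access pattern: walking kernel structures issues many small reads, and against object storage each uncached read is a network round trip. One common mitigation is block caching, sketched below as a toy: `CountingSource` simulates a remote object and counts fetches, and `BlockCache` is a hypothetical helper, not code from the fork (fsspec-based libraries such as s3fs and gcsfs ship their own caching strategies for file objects).

```python
from typing import Dict


class CountingSource:
    """Stands in for a remote object and counts how many fetches hit the network."""

    def __init__(self, data: bytes) -> None:
        self.data = data
        self.fetches = 0

    def fetch(self, start: int, end: int) -> bytes:
        self.fetches += 1
        return self.data[start:end]


class BlockCache:
    """Serves small reads from fixed-size blocks fetched once and kept in memory."""

    def __init__(self, source: CountingSource, block_size: int = 4096) -> None:
        self.source = source
        self.block_size = block_size
        self.blocks: Dict[int, bytes] = {}

    def _block(self, index: int) -> bytes:
        if index not in self.blocks:
            start = index * self.block_size
            self.blocks[index] = self.source.fetch(start, start + self.block_size)
        return self.blocks[index]

    def read(self, offset: int, length: int) -> bytes:
        out = bytearray()
        while length > 0:
            index, inner = divmod(offset, self.block_size)
            chunk = self._block(index)[inner:inner + length]
            if not chunk:
                break  # past the end of the object
            out += chunk
            offset += len(chunk)
            length -= len(chunk)
        return bytes(out)


source = CountingSource(bytes(range(256)) * 64)  # 16 KiB dummy "image"
cache = BlockCache(source, block_size=4096)
for offset in range(0, 4096, 8):  # 512 small reads, like walking a structure
    cache.read(offset, 8)
print(source.fetches)  # 1
```

The block size trades latency against wasted transfer: larger blocks mean fewer round trips but more bytes fetched that are never used.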

Do not hesitate to reach me at felix.guyard@forensicxlab.com to improve this article or to comment on the integration into the volatility framework.